From GDPR to AI Regulations: The Next Big Shift in Data Privacy

Summary

Businesses must prepare by updating policies, auditing AI decisions, and ensuring compliance. The WPLP Compliance Platform provides AI-ready legal templates to help organizations stay audit-ready, avoid fines, and build trust.

Since it took effect in 2018, the General Data Protection Regulation (GDPR) has reshaped global data privacy standards.

It established strict rules that every organization must follow when collecting, using, or storing personal data.

As a result, businesses around the world had to develop compliance programs to meet GDPR requirements.

With the rapid advancement of artificial intelligence (AI), new challenges are emerging. AI systems, from chatbots to predictive analytics, consume large amounts of data and make decisions about individuals in ways that older privacy laws never anticipated.

This is why regulators are moving toward AI-specific rules, most notably the EU AI Act, which aims to ensure accountability, fairness, and transparency in AI systems.

In this article, we explore how privacy law is shifting from GDPR to AI regulations, what this means for organizations, and how the WPLP Compliance Platform can help them remain audit-ready.

From GDPR to AI regulations: A Quick Recap of Privacy Evolution

The GDPR was a turning point in data privacy laws.

The European Union's General Data Protection Regulation (GDPR) took effect in May 2018.

Its objective was to give individuals more control over their data and make organizations responsible for how they collect, use, and store it. GDPR applies not only to businesses in the EU but also to companies anywhere in the world that offer goods or services to, or monitor the behavior of, people in the EU.

When an organization relies on consent to collect personal information, GDPR requires that consent to be clear and affirmative, such as a user actively clicking to agree, rather than implied by silence or pre-ticked boxes.

Transparency of data practices is a key requirement of GDPR. Individuals are given essential rights, such as the rights to access, correct, erase, and transfer their data.

Non-compliance with GDPR can result in fines of up to €20 million or 4% of global annual turnover, whichever is higher, making it one of the strictest and most wide-ranging privacy laws in the world.

However, GDPR wasn’t designed with today’s AI-powered world in mind. AI introduces risks that GDPR only partially addresses, such as:

- Automated decision-making (loans, hiring, medical diagnostics).

- Profiling based on behavior or biometric data.

- Opaque algorithms where users can’t understand how decisions are made.

As AI grows more advanced and widely used, regulators face new challenges.

How do you oversee algorithms that act like a “black box,” making decisions such as who gets hired? What happens when AI is trained on massive datasets taken from the internet without consent?

To address these issues, a new wave of AI-specific regulations is emerging, aimed at ensuring AI is developed and used safely, transparently, and ethically.

Now let’s move from GDPR to AI regulations and see what is coming next.

AI Regulations: What’s Coming Next

The European Union is once again showing its leadership with the EU AI Act, the world's first comprehensive legal framework for AI. The Act entered into force in August 2024, and its obligations phase in between 2025 and 2027. It also complements international standards such as ISO/IEC 42001:2023 for AI management systems.

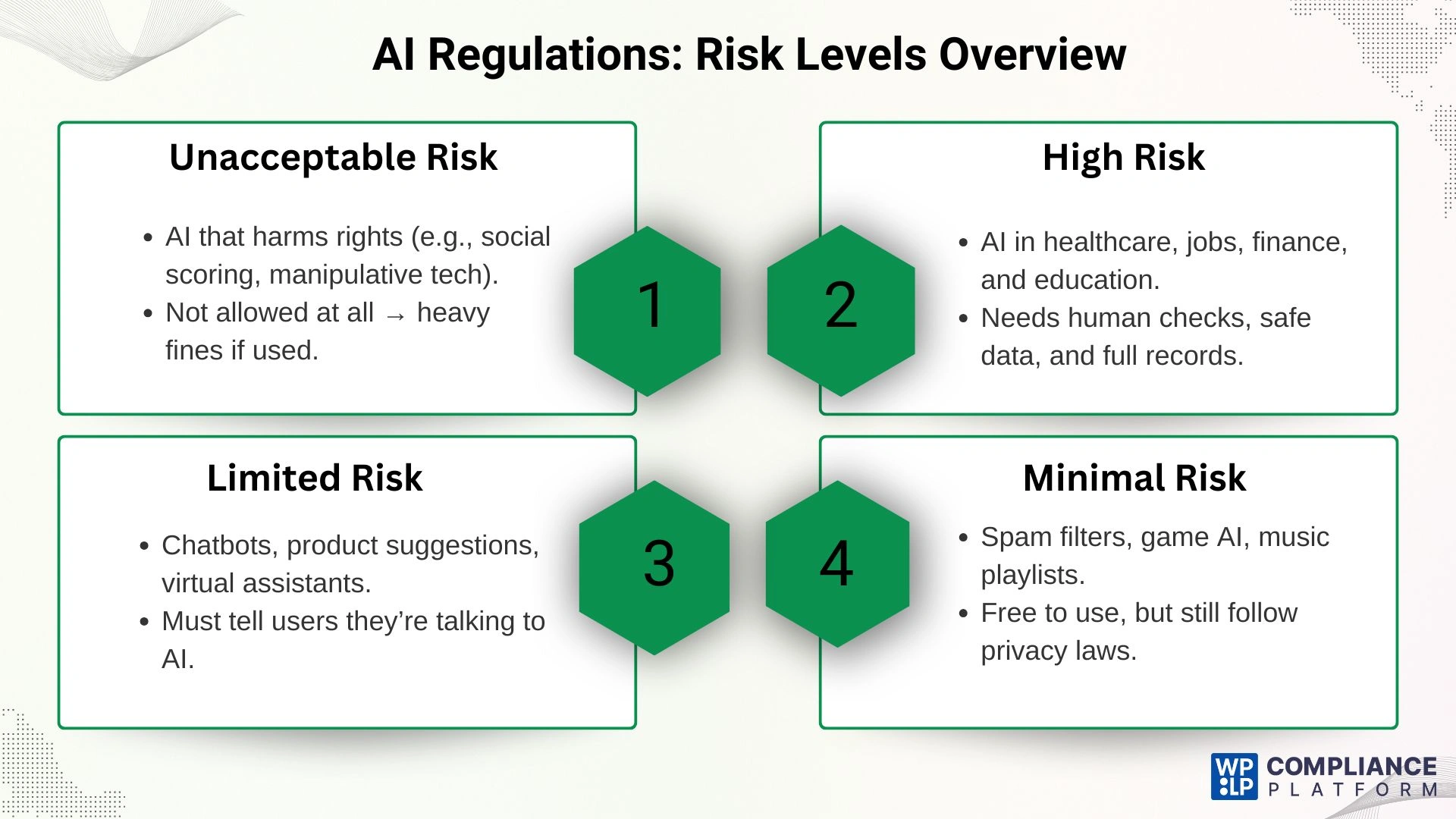

The Act sorts AI systems into four risk levels.

1. Unacceptable Risk AI

Examples:

- Social scoring systems (rating citizens based on behavior, like in China).

- AI that manipulates people's behavior through subliminal or deceptive techniques without their knowledge.

- Real-time biometric surveillance in public places (with narrow exceptions for law enforcement).

Rules: These uses are considered a clear danger to human rights and are banned outright in the EU.

What it means for businesses: You cannot deploy or sell these AI systems in the EU market. Doing so could result in severe fines and bans.

2. High-Risk AI

Examples: AI in healthcare, finance, employment, and education.

Rules: These systems must meet strict requirements because they directly affect people's lives and rights. Obligations include training the model on high-quality, representative data to minimize bias, and ensuring human oversight so the AI does not operate unchecked.

Providers must also keep detailed logs of how decisions are made and carry out risk assessments before deployment.

What it means for businesses: Expect compliance requirements similar to GDPR, but focused on fairness, accountability, and transparency.

3. Limited Risk AI

Examples: Customer service chatbots, AI-generated product recommendations, and virtual assistants.

Rules: Transparency is required. For example, if a user is chatting with an AI bot, they must be told it’s an AI, not a human.

What it means for businesses: You don’t need heavy compliance processes, but you must clearly disclose AI usage.

4. Minimal Risk AI

Examples: Spam filters, video game AI, and recommendation engines for music playlists.

Rules: These applications are considered safe because they don’t directly impact people’s rights, safety, or opportunities.

What it means for businesses: Minimal or no regulatory obligations. You can use them freely, but still follow general laws like GDPR if personal data is involved.
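To make the four tiers above easier to apply to an internal AI inventory, here is a minimal Python sketch. The use-case names, the lookup table, and the obligation strings are hypothetical simplifications drawn from the examples in this article; real classification depends on the Act's own definitions and annexes and should be confirmed with legal counsel.

```python
from enum import Enum


class RiskTier(Enum):
    """The four EU AI Act risk levels described above."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified lookup built from this article's examples.
# It is NOT a legal classification; unknown systems need manual review.
EXAMPLE_USE_CASES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "hiring_screening": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "customer_service_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

# Summarized obligations per tier, as described in the sections above.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited: do not deploy or sell in the EU"],
    RiskTier.HIGH: [
        "high-quality, representative training data",
        "human oversight",
        "decision logging",
        "pre-deployment risk assessment",
    ],
    RiskTier.LIMITED: ["disclose that users are interacting with AI"],
    RiskTier.MINIMAL: ["no AI-specific obligations; GDPR still applies to personal data"],
}


def obligations_for(use_case: str) -> list[str]:
    """Return the obligation checklist for a known use case."""
    tier = EXAMPLE_USE_CASES.get(use_case)
    if tier is None:
        # Anything not in the table falls back to a manual legal review.
        return ["unclassified: review with legal counsel"]
    return OBLIGATIONS[tier]


print(obligations_for("customer_service_chatbot"))
print(obligations_for("hiring_screening"))
```

Defaulting unknown systems to "review with legal counsel" rather than to the minimal tier is a deliberate choice: under-classifying a high-risk system is the costlier mistake.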

The EU is not alone. Other regions are actively working on AI regulations and data privacy.

- United States: Exploring AI accountability frameworks and state-level rules.

- United Kingdom: Pushing for pro-innovation AI regulation.

- Canada: Proposed the Artificial Intelligence and Data Act (AIDA).

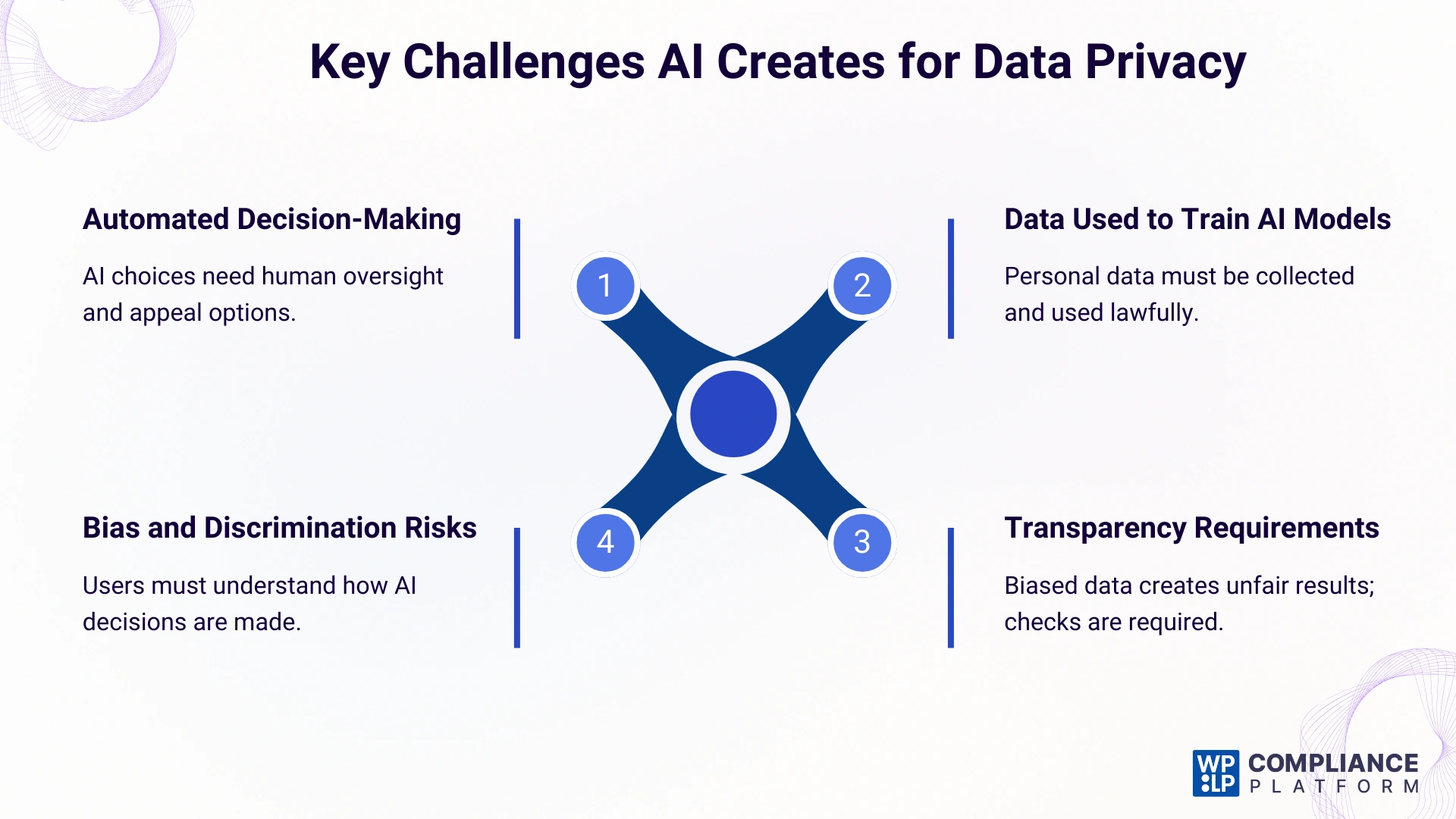

Key challenges AI creates for data privacy:

- Automated Decision-Making: AI systems can make decisions automatically, from credit approvals to job application screening. New regulations require human oversight of AI decisions and give individuals the ability to challenge them.

- Data Used to Train AI Models: The data used to train AI can include personal information, and regulators are working out how to ensure that such data was lawfully collected and used.

- Bias and Discrimination Risks: If AI is trained on biased data, it will produce biased and unfair results. New laws will require businesses to check for bias regularly and take steps to reduce it.

- Transparency Requirements: Unlike traditional rule-based software, it often isn't clear how an AI system reaches a conclusion. New regulations will require a level of explainability so that users can understand how an AI decision was made.

What Businesses Need to Prepare For

Shifting from GDPR to AI regulations requires a strategic approach. Waiting for a violation to happen is not an option: by then, the financial and reputational damage can be severe.

Under the EU AI Act, penalties for the most serious violations can reach €35 million or 7% of a company's total worldwide annual turnover, whichever is higher.
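Because the top penalty tier uses whichever of the two amounts is higher, the maximum exposure depends on company size. The minimal Python sketch below computes only that theoretical ceiling for the most serious violations. It does not predict actual fines, which authorities set case by case, and it does not model the lower tiers or the Act's separate treatment of SMEs and start-ups (for whom the lower of the two amounts applies). The function name is illustrative.

```python
def max_ai_act_fine_eur(worldwide_turnover_eur: int) -> int:
    """Ceiling for the most serious EU AI Act violations:
    EUR 35 million or 7% of total worldwide annual turnover, whichever is higher."""
    fixed_cap = 35_000_000
    # Integer arithmetic in whole euros avoids floating-point rounding.
    turnover_cap = worldwide_turnover_eur * 7 // 100
    return max(fixed_cap, turnover_cap)


# EUR 100 million turnover: 7% is EUR 7 million, so the fixed cap applies.
print(max_ai_act_fine_eur(100_000_000))    # 35000000
# EUR 2 billion turnover: 7% is EUR 140 million, which exceeds the fixed cap.
print(max_ai_act_fine_eur(2_000_000_000))  # 140000000
```

The crossover sits at €500 million in turnover: below it the fixed €35 million cap dominates, and above it the 7% figure does.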

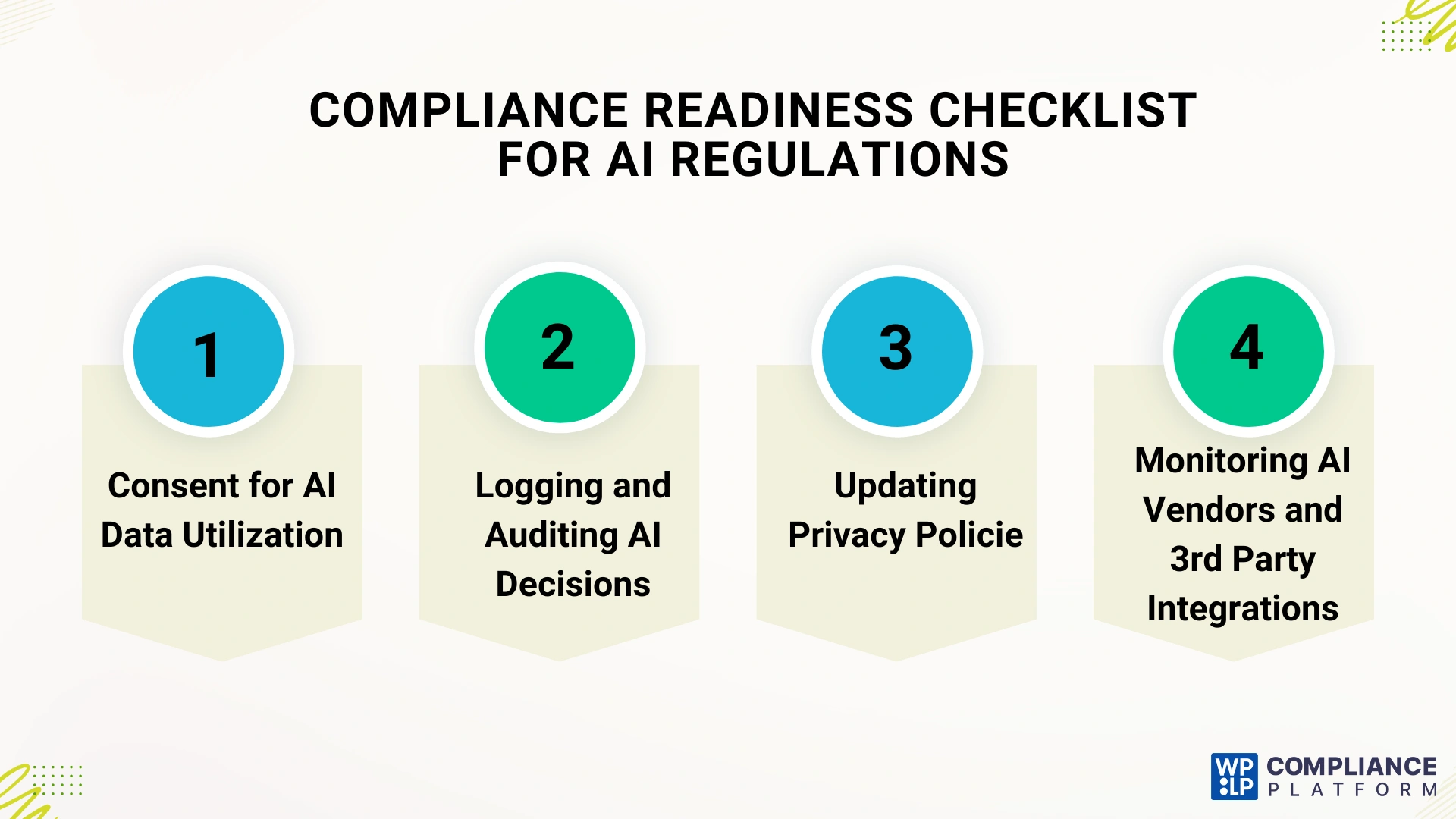

Here is a compliance checklist to help you prepare.

Compliance Readiness Checklist:

- Consent for AI Data Utilization: Your current consent management system may be sufficient for cookies, but does it cover using personal data to train AI? You need a clear legal basis for using people's personal data as training data for AI models, particularly for high-risk AI applications.

- Logging and Auditing AI Decisions: New regulations will likely require an audit trail for high-risk AI systems. Can you document how a decision was made? You need a system that logs AI inputs, outputs, and the reasoning behind key decisions so that you can demonstrate accountability.

- Updating Privacy Policies: You need to update your existing privacy policy to properly explain how AI is being used, what data AI is processing, and what rights individuals will have as a result of these systems. You also need to maintain transparency with individuals regarding automated decisions and profiling as required by the legislation.

- Monitoring AI Vendors and 3rd Party Integrations: The risk does not end with your own AI systems; you are also responsible for the AI tools and services you use from 3rd party vendors. Verify that their systems are compliant and provide the necessary transparency.
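The logging item in the checklist above can start as simply as an append-only file of structured records. The Python sketch below is one possible shape, assuming a JSON Lines file; the `AIDecisionRecord` schema, its field names, and the file name are hypothetical illustrations, not a format prescribed by any regulation.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AIDecisionRecord:
    """One audit-trail entry for an automated decision (hypothetical schema)."""
    system_id: str        # which AI system made the decision
    model_version: str    # exact model version, so the decision can be traced
    inputs: dict          # features the model saw (keep personal data minimal)
    output: str           # the decision or score produced
    rationale: str        # human-readable summary of the main factors
    human_reviewer: Optional[str] = None  # who reviewed the decision, if anyone
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_record(path: str, record: AIDecisionRecord) -> None:
    """Append the record as a single JSON line; the file is only ever appended to."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")


# Example: a hypothetical loan-screening decision referred to a human.
record = AIDecisionRecord(
    system_id="loan-screening",
    model_version="2024-06-01",
    inputs={"income_band": "B", "existing_debt_ratio": 0.42},
    output="refer_to_human",
    rationale="Debt ratio above the 0.40 threshold",
    human_reviewer="analyst-17",
)
append_record("ai_decisions.jsonl", record)
```

Recording the model version and the human reviewer alongside each decision is what lets you answer "how was this decided, and who checked it?" during an audit.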

How WPLP Compliance Platform Helps

Many businesses still think of compliance as a cookie consent banner. But that is not enough.

WPLP Compliance Platform goes beyond cookie banners, providing the tools you need to not only meet GDPR requirements but also prepare for the AI-driven regulatory era.

With AI increasingly used in customer interactions, analytics, and decision-making, businesses need to update their legal policies with clear AI usage disclosures.

The WPLP Compliance Platform simplifies this process.

Instead of writing your own policies for each critical area of compliance, you get access to professionally written, customizable legal templates.

Here's what the WPLP Compliance Platform covers. The following legal pages include AI-related clauses:

- Professional Privacy Policy: Covers AI data processes, user rights, and transparency so your customers understand how AI impacts them.

- Terms and Conditions: Establish fair usage, liability, and business practices, and clarify how your terms apply to your AI-driven, automated operations.

- DMCA Policy: This protects your website from copyright claims and explains how content ownership and violations need to be reported.

- General Disclaimer: Protects your company from potential legal liability by setting limits on your business's responsibility, including for decisions based on AI tools.

- Earnings Disclaimer: Crucial for any company selling financial advice, courses, or marketing services, to indicate that results may vary.

- COPPA Policy: Ensures compliance whenever your website or service collects data from children under 13 years of age in the United States.

- Cookies Policy: Outlines what data is collected through cookies, including tracking technologies powered by AI or machine learning.

This matters because the EU AI Act and similar laws are driving growing demand for transparency about how businesses use AI. Your privacy policy and disclaimers should clearly state how AI is used, for example in chatbots, credit scoring, or personalized recommendations.

With WPLP, you get more than generic templates. You get AI-ready policies that reflect these growing requirements to help you:

- Avoid compliance gaps that could result in fines.

- Promote customer trust by being truly transparent in how you are utilizing AI.

- Stay future-proof as compliance regulations evolve.

Future Outlook: The Next Decade of Data Privacy

The next decade of data privacy will shift from focusing mainly on data consent to emphasizing accountability and transparency. GDPR began this journey by giving individuals more control over their personal information.

Now, upcoming AI regulations will take it further by holding businesses responsible for the results and social impact of their AI systems.

Businesses that adapt to this change and invest in strong, future-ready compliance platforms will gain a significant competitive edge.

They will earn trust from their customers and avoid the high costs of penalties and reputational harm caused by non-compliance. The best time to prepare for this new era is now.

Frequently Asked Questions

GDPR aims to protect people's personal data. The EU AI Act complements it by assessing whether AI systems are safe and ethical based on their level of risk. In many cases the two frameworks reinforce each other; in others, their requirements can be in tension and must be reconciled.

Yes. If an AI system processes personal data, both the EU AI Act and GDPR apply, so organizations must comply with both frameworks at the same time.

The EU AI Act is a regulation that groups AI systems by risk level and attaches obligations to the providers and users of those systems. It entered into force in August 2024, with phased implementation over the following years.

Companies should begin by creating a complete inventory of their AI systems and uses. They should then evaluate each system, review their data governance and privacy policies, and implement technical solutions for logging, auditing, and transparency.

Penalties differ by region, but under the EU AI Act, they are tiered and can be even higher than GDPR fines. Depending on how severe the violation is, businesses may face fines of tens of millions of euros or a percentage of their global annual revenue.

Conclusion

The regulatory landscape is changing. Compliance is moving beyond GDPR, with AI regulations becoming the primary focus. This shift is not only a legal requirement but also a key strategic priority for businesses.

Businesses must move from a reactive, GDPR-only mindset to a proactive, integrated compliance strategy that addresses the unique risks associated with AI.

By focusing on accountability, transparency, and data governance now, you can position your business for future success.

Platforms like WPLP are designed to give you what you need, providing the tools and flexibility to prepare for each new compliance regulation.

Disclaimer: This blog post is for informational purposes only and does not constitute legal advice. Businesses should consult with legal counsel to ensure full compliance with all applicable data privacy and AI regulations.

If you liked this article, consider reading:

- Avoid Dark Patterns Cookie Banners: Honest and Ethical Design for Compliance

- Most Common Privacy Policy Issues To Avoid

- What is a Data Breach and How to Prevent It?

Ensure your AI usage is compliant. The WPLP Compliance Platform provides templates and tools for audit readiness.